Limits on artificial thinking

Generative AI may seem creative, but like us it's poor at reasoning when things get too complex

Constraints are an important factor in thinking about the future. But some constraints are wilfully or ignorantly ignored, especially in discussions about technologies.

There is no denying that generative artificial intelligence has made rapid progress in just a few years, with the pace of adoption extremely quick. But adoption does not mean lasting utility, or continued exponential progress. Unsurprisingly, given the early stage of development, many companies are still struggling to find out what it can do for them. A recent McKinsey survey found 80% of respondents had yet to see a tangible economic benefit from adopting generative AI. Another survey reported that a third of companies found integrating AI agents into their workflow “extremely challenging.”

Some extolling the potential of artificial intelligence find it difficult to perceive or acknowledge limits to the technology and its applications, or expect challenges can be quickly overcome. Sam Altman, the CE of OpenAI, believes we have already entered the era of “super-intelligence”, with “novel insights” from AI on the cusp of emerging.

Demis Hassabis, Google DeepMind’s CE, thinks AI will let us eventually “colonise the galaxy”, starting in the next five years.

The CE of Anthropic believes “powerful AI”, which he defines as “smarter than a Nobel Prize winner”, will emerge next year. These techno-utopian visions will lead, they proclaim, to dramatic acceleration in research and development, be crucial in solving economic, social, and environmental problems, and improve liberal democracy.

These views are I think a combination of uncritical techno-optimism, political influencing, and calls for investment. Despite many leaders in the AI field just a few years back calling for a “pause on giant AI experiments”, that caution has been abandoned. Some of those signatories are seeking, with some success, minimal regulatory oversight in the US, so they can dominate AI developments and the economic benefits they hope will follow. Lobbying for bans on AI regulation there has failed, so far, but the US has a much more permissive regulatory environment for AI than many other countries.

AI is a very hungry beast. Not just for data, but also investment and regulatory leniency, so boosterism and hype can be a core economic and political strategy of AI companies. One commentator has compared the current hype about AI to the blockchain boosterism seen nearly a decade ago. Irrational exuberance followed soon after by disillusionment.

Limits of artificial intelligence tools

Generative and other types of AI have proven very effective for a variety of tasks, but generative AI is often unreliable, so a gung-ho approach is not justified. Recent research, for example, indicates that current AI therapists are not safe and should not be used as replacements for human therapists at this point in time.

A decade ago, some AI proponents were confidently predicting that AI would replace radiologists in just a few years. That hasn’t happened. Instead, radiologists are more in demand as other technologies drive a data boon that makes their tasks more complicated and demanding. Complementarity rather than replacement is how medical use of AI is evolving.

Humans and AI have different biases, so combining radiological applications of AI with radiologists’ skills is improving diagnostic accuracy. And AI is automating routine tasks like report writing, allowing radiologists to focus on more complex issues. There is also what Professor Eric Topol calls a shift from “unimodal” to “multi-modal” use of AI in medicine, which involves moving from AI analysing a single data set to analysis and integration of a suite of patient data.

However, doctors and medical researchers are concerned that oversight and regulation of medical AI tools lag well behind their pace of adoption. Regulatory standards for AI tools in medicine tend to be less rigorous than for other medical devices and therapies, and the AI tools keep evolving once approved.

Guessing usurps logic when things get too complex

AI optimists often assume that since a range of relatively straightforward tasks can be handled by current models, scaling to handle more complex problems will follow soon. However, researchers at Apple recently published a paper that finds some AI models do not handle complex situations well, failing above certain levels of complexity.

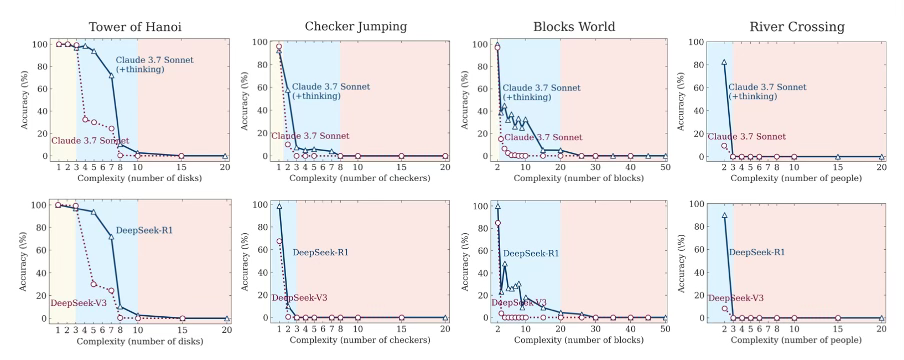

The researchers tested several Large Reasoning Models on four relatively simple puzzles – the Tower of Hanoi, Checkers Jumping (swapping positions of coloured tokens), River Crossing (transporting different numbers of people, animals & plants across a river), and Blocks World (where stacks of blocks are rearranged). The puzzles can be solved by young children with practice (up to a certain point of difficulty).

These Large Reasoning Models are advanced generative AI models intended to handle more complex tasks, and to show their reasoning. But the researchers found that they were not able to reason effectively above certain levels of complexity. For example, in the Tower of Hanoi, models started to fail when there were more than four disks. The models all over-thought easy problems and under-thought harder ones.

The study authors found that the models' performance was neither logical nor intelligent when the puzzles became too complex. Like many humans, the algorithms gave up reasoning after the task got too difficult, reverting to guesses rather than logic.

Some commentators have suggested that the paper may be cover for Apple’s limited success in developing "Apple Intelligence."

However, Gary Marcus, a well-respected AI researcher, notes that he and other researchers have for some time pointed out the limitations of Large Language Models, and this research supports that. He notes that well-designed conventional algorithms can already solve such puzzle problems, so it highlights constraints of the reasoning models.
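To make the point about conventional algorithms concrete, here is a minimal sketch of the textbook recursive solution to the Tower of Hanoi. It is an illustration only and is not taken from the Apple paper or from Marcus; the function names `hanoi` and `is_valid_solution` and the peg labels are assumptions chosen for this example.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves that shifts n disks
    from source to target. Peg labels are illustrative assumptions."""
    if n == 0:
        return []
    # Move n-1 disks out of the way, move the largest disk, then move
    # the n-1 disks back on top of it.
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


def is_valid_solution(n, moves):
    """Replay the moves and check the puzzle rules are never broken."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for src, dst in moves:
        if not pegs[src]:
            return False  # nothing to move from this peg
        disk = pegs[src].pop()
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk cannot sit on a smaller one
        pegs[dst].append(disk)
    # Solved only if every disk ended up on peg C in the right order.
    return pegs["C"] == list(range(n, 0, -1)) and not pegs["A"] and not pegs["B"]


if __name__ == "__main__":
    for n in (3, 5, 10):
        moves = hanoi(n)
        print(n, len(moves), is_valid_solution(n, moves))
```

The number of moves is 2^n - 1, so ten disks take 1,023 moves. A dozen lines of ordinary code apply the same rule at every size without losing track, which is the kind of reliability Marcus contrasts with the reasoning models.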

Marcus emphasises that developing artificial general intelligence (if that is feasible)

“… shouldn’t be about perfectly replicating a human, it should (as I have often said) be about combining the best of both worlds, human adaptiveness with computational brute force and reliability.”

He is not a sceptic of AI, but an advocate for developing a variety of AI approaches and models, and recognising every method and technology has limits.

“Whenever people ask me why I (contrary to widespread myth) actually like AI, and think that AI (though not GenAI) may ultimately be of great benefit to humanity, I invariably point to the advances in science and technology we might make if we could combine the causal reasoning abilities of our best scientists with the sheer compute power of modern digital computers.”

Sean Goedecke is critical of hot takes on the Apple paper that claim the models can’t reason. He points out that the paper shows that the models can reason, but only up to certain thresholds of complexity. When they can’t compute all possible steps, they try to find shortcuts and fail. We shouldn’t, he says, be surprised by this.

Other researchers found that current Large Language Models fail when given difficult mathematical reasoning tasks. They made errors, missed steps, and contradicted themselves. This was due in part to how the models developed their optimisation strategies when being trained.

It’s important to note that both studies looked at narrow cases of reasoning (a few puzzles, and hard maths problems). And some of the performance challenges these two studies report are likely to be reduced over the next few years.

Just as AI hype overestimates AI abilities, we should be cautious of overestimating their limitations too. It is just as easy, and misleading, to dismiss the potential of AI as to see it as unlimited. As AI models evolve and improve we need to grow our own critical thinking so we can use them appropriately, and regulate them and the companies that develop them effectively.

Very useful, thank you Robert. I especially liked these two cut through observations, quote:

1. These views are I think a combination of uncritical techno-optimism, political influencing, and calls for investment.

2. AI is a very hungry beast. Not just for data, but also investment and regulatory leniency, so boosterism and hype can be a core economic and political strategy of AI companies.

Tony Brenton-Rule